Published Monday, 19. September 2011, 00:37, Danish time.

Handling client-side state in round-trip based web apps can be fairly tricky. The tricky part is deciding when and how to save changed state during the user's session with your server, and how to synchronize state seamlessly between the client and the server along the way without slowing down your pages. The statelessness of the web will most probably challenge you now and then.

There are a number of approaches and techniques that can help you. They all have their sweet spots, but also built-in downsides and often unwanted side effects. As in a lot of other areas of software development, it is important to know how much data you need to handle and what kind of security constraints your solution should work under.

Classic approaches to handle state across round-trips

In order to handle state in your application, there are a number of classic techniques you can use, each with its own built-in pros and cons. None of them is perfect, as you will see. Below is a brief discussion of the pros and cons of the various techniques.

This section of the post turned out to be fairly dense, but I think it’s important, and you can really get into trouble if you don’t know these things.

Here goes:

- Storing state data in cookies was the original solution to the problem. It’s used on almost every web site out there for storing user ids and session ids.

Pros: (1) Good and easy for small amounts of data – 4K is generally considered the limit, but you should never go that far. (2) Data can be encrypted by the server, but is then of course inaccessible to the client-side code. Cookies are also where the server stores user and session ids between requests in all frameworks I know of. (3) Data is personal and bound to the user and the PC he is logged in to.

Cons: (1) Cookies can be stolen by malicious software. (2) The browser submits the cookies related to a given hostname in every request to that host, including requests for .jpg, .css and .js files, so you will hurt performance if your cookies are too big.
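
To make the size concern concrete, here is a minimal sketch in Python using only the standard library. The cookie name `sid` and the helper names are my own assumptions for illustration; they are not taken from any particular framework. The first function builds a small session cookie, the second estimates how many bytes the browser adds to every request for the cookies it holds.

```python
from http.cookies import SimpleCookie


def build_session_cookie(session_id):
    """Return the value of a Set-Cookie header for a small session cookie.

    The cookie name "sid" is a hypothetical choice for this sketch.
    """
    cookie = SimpleCookie()
    cookie["sid"] = session_id
    cookie["sid"]["path"] = "/"
    cookie["sid"]["httponly"] = True  # hide the cookie from client-side scripts
    return cookie["sid"].OutputString()


def cookie_header_size(cookies):
    """Estimate the bytes the browser adds to EVERY request for these cookies."""
    header = "; ".join(f"{name}={value}" for name, value in cookies.items())
    return len(header.encode("utf-8"))


if __name__ == "__main__":
    print(build_session_cookie("abc123"))
    print(cookie_header_size({"sid": "abc123", "theme": "dark"}))
```

The point of the second function is that the cost is paid on every request to the host, images and style sheets included, so a session id is fine while a serialized shopping basket is not.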

- State data can also be stored in hidden HTML input fields.

Pros: (1) This way, you can store data in a way that is invisible to the user. (2) If the field is part of a form, its content is automatically submitted as part of the form when the user submits his input.

Cons: (1) Transporting the state back to the server requires that the page is posted back to the server (for instance by the user pressing a submit button) or that some client-side code reads the fields and sends them. (2) If the data is not encrypted (and it’s not, unless you do it), it can be manipulated easily by a hacker, so you will have to validate it (and you should do that on every round-trip). You should of course validate the incoming data anyway before you stick anything in your database. (3) Remember that post-backs do not “play well with the web”, because they are not “back button compatible” (see below). (4) If the user bookmarks your page, the current state is not saved with the bookmark.
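
One common way to detect manipulated hidden fields is to sign them on the server. Note that this is signing, not the encryption mentioned above: the user can still read the value, but cannot change it unnoticed. The sketch below uses Python's standard `hmac` module; `SECRET_KEY`, `sign_field` and `verify_field` are hypothetical names chosen for this example.

```python
import hashlib
import hmac

# Assumption: a server-side secret that never reaches the browser.
SECRET_KEY = b"replace-with-a-real-secret"


def sign_field(value):
    """Return 'value|signature', suitable for a hidden input's value attribute."""
    mac = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{value}|{mac}"


def verify_field(signed):
    """Return the original value, or None if the field was tampered with."""
    value, sep, mac = signed.rpartition("|")
    if not sep:
        return None  # no signature present at all
    expected = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    if hmac.compare_digest(mac.encode("utf-8"), expected.encode("utf-8")):
        return value
    return None
```

Even with a valid signature you should still validate the value itself before it goes anywhere near your database.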

- A variant of storing data in hidden fields is ASP.NET WebForms’ hidden field named ViewState. It’s used by internal WebForms logic to store the state of the view, which in this case is selected properties from controls in the page and also a few other things.

ViewState has the same pros and cons as normal hidden fields, but makes things worse by encoding the data (as Base64 by default), which makes it grow in size. If you develop using the built-in grid components and don’t watch out, you can easily end up with a page weight of, say, 300K. Then your stuff is suddenly only suited for Intranet usage. This has caused many WebForms developers to spend lots of time fine-tuning pages by disabling ViewState on individual controls. To be fair, Microsoft did put a lot of effort into improving this in .NET 4, but you are still stuck with the post-back requirement. It should also be noted that, unless you explicitly turn on encryption, it’s fairly easy for a hacker to decode the ViewState and start studying the application’s internals.

- You can also store state data in all links in your page as query string parameters. This will cause the browser to send the parameters to the server when the user clicks a link. This method has been used a lot by big sites like Amazon and eBay.

Pros: Simple and robust solution, if you can live with the cons and with the fact that your state is public and easily changeable. In Amazon’s case, where I assume they just use it to determine, say, which sidebar ads they want to display on the next page, it is hardly a big problem, if somebody experiments with the values.

Cons: (1) Results in ugly URLs. (2) Has the same performance downsides as described for cookies, because the browser also sends the URL of the referring page to the server in an HTTP header as part of every request. Actually, this will cause the browser to send the state data twice when you click a page link. (3) Web servers generally “only” support URLs up to a size of about 2K characters. (4) It’s a lot of work to “patch” every link in the page to include all the state data. Functions for this are not standard anywhere, I think (at least not in ASP.NET). (5) It’s easy for the user to start hacking on your site by messing around with parameters. (6) Foreign web sites will get a copy of your state values if you link or redirect to somewhere else. (7) Client-side code cannot easily interact with these parameters.
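
The link “patching” in con (4) can be sketched with Python's standard `urllib.parse`. The function name `add_state` is hypothetical; the idea is simply to merge the state values into whatever query string a link already has while leaving the rest of the URL alone.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_state(url, state):
    """Return url with the state values merged into its query string.

    Existing parameters are kept; a state value replaces a parameter with
    the same name. Repeated parameter names are collapsed to the last one,
    which is a simplification made for this sketch.
    """
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update(state)
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment)
    )


if __name__ == "__main__":
    print(add_state("/products?page=2", {"sort": "price"}))
```

You would have to run every link on the page through a function like this, which is exactly the tedious part.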

- Fragment identifiers are a lot like the above query string parameters with regard to pros and cons. The fragment identifier is the part of the URI that comes after the “#” (hash mark), like “Examples” at the end of: http://en.wikipedia.org/wiki/Fragment_identifier#Examples. The original idea behind it was to allow the user to jump quickly between internal sections of a web page, and hence the browser doesn’t request a new page from the server when the user clicks local fragment identifier links on a page. This also means that communication with the server involves sending data using client-side code.

Widespread use of the fragment identifier for keeping state is fairly new and almost exclusively seen in web apps with large amounts of client-side logic, so I wouldn’t really consider it a classic technique for storing state, although it has been doable for a lot of years. I consider this technique one big, clever hack, but it works beautifully and I think that we will see it everywhere in the near future.
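
A small sketch may help to show why the fragment never reaches the server. Using Python's standard `urllib.parse`, the hypothetical helper below splits a URL into the part that goes into the HTTP request line and the fragment, which stays in the browser.

```python
from urllib.parse import urlsplit


def split_for_request(url):
    """Split a URL into (what is sent to the server, what stays in the browser)."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # The fragment is never part of the request; only client-side code sees it.
    return target, parts.fragment


if __name__ == "__main__":
    url = "http://en.wikipedia.org/wiki/Fragment_identifier#Examples"
    print(split_for_request(url))
```

So any state kept after the hash mark has to be read by client-side code and sent to the server separately.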

- Last, but not least, you can use server side storage, like ASP.NET’s Session State. This is usually a key-value table that resides in memory or is stored in a database on the server-side.

Pros: (1) Virtually unlimited storage compared to the above methods. (2) Only a small identifier is transported back and forth between the browser and the server; usually this is a session key in a cookie.

Cons: (1) It doesn’t scale well. Letting users take up extra memory means fewer users per server, which isn’t good. (2) If you stick with the in-memory solution, you will get server affinity, meaning that your user will always have to return to the same server to get to his data. If your server sits behind a load balancer, you must use “sticky sessions”. (3) If/when your server crashes or is restarted, you lose your data and kick all the users on that server off. (4) Cons (2) and (3) can be avoided by storing session data in a database, but then you pay by loading and saving data from and to the database on every request, which is generally considered “expensive”. (I wonder whether the new, high-speed NoSQL databases like MongoDB will change this picture soon.) (5) You can’t keep these data forever and will have to delete them at some point. On ASP.NET, the default expiration time for session data in memory is 20 minutes (less than a lunch break). You will turn some users down no matter which expiry you set. If you stick your session data in a database, you will have to run clean-up jobs regularly. (6) Again: client-side code cannot easily interact with these data.
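
To illustrate what such a server-side key-value table looks like, here is a minimal in-memory sketch in Python. It is not how ASP.NET implements Session State; the class name `SessionStore` and its methods are assumptions made for this example. It shows the two things discussed above: only a small key leaves the server, and idle sessions have to be expired and cleaned up.

```python
import secrets
import time


class SessionStore:
    """In-memory key-value session table with a sliding (idle) expiration."""

    def __init__(self, ttl_seconds=20 * 60, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the expiry logic can be tested
        self._sessions = {}  # session id -> (last access time, data dict)

    def create(self):
        """Create an empty session and return the key to put in a cookie."""
        sid = secrets.token_urlsafe(16)
        self._sessions[sid] = (self._clock(), {})
        return sid

    def get(self, sid):
        """Return the session's data dict, or None if unknown or expired."""
        entry = self._sessions.get(sid)
        if entry is None:
            return None
        last_access, data = entry
        now = self._clock()
        if now - last_access > self._ttl:
            del self._sessions[sid]
            return None
        self._sessions[sid] = (now, data)  # each access restarts the idle timer
        return data

    def purge_expired(self):
        """Clean-up job: drop all idle sessions and return how many were removed."""
        now = self._clock()
        expired = [
            sid for sid, (seen, _) in self._sessions.items() if now - seen > self._ttl
        ]
        for sid in expired:
            del self._sessions[sid]
        return len(expired)
```

Because the dictionary lives in one process, this sketch has exactly the affinity and restart problems listed in cons (2) and (3).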

Phew. There is a lot to consider here! As I said, I find it important to know both pros and cons in order to be able to make the right architectural decisions.

REST – specifying everything in the request

It’s perhaps appropriate to also mention REST in this post, because it gets a fair amount of buzz these days (even though it’s been around since 2000), and because it is about managing state. REST stands for REpresentational State Transfer.

When evaluating REST, it’s fair to say that it has the same pros and cons as I listed for query string parameters above (although REST uses more transport mechanisms than just query strings). The reason is that one of REST’s novel goals is to make the server stateless in order to gain scalability. And making the server stateless of course means that the client must send all relevant state to the server in every request.

So, if you are dealing with complex application state, REST in combination with round-trips will most probably make your requests too heavy and your app won’t perform well. It is, however, very usable if your app hasn’t got a lot of client-side state to handle. And it’s also a very good way for a “fat” client to communicate with the server.

REST is, however, a very sound architectural style and it would pay off for most web developers to know it and use it everywhere it’s applicable. In the .NET world, REST has not yet caught on well. It’s a pity, but I think it will. It should; it captures the essence of the web and makes your app scalable and robust.

Future options for keeping state on the client side

In the future you can save state on the client side, but it will take years before this is widespread. Google pioneered this with Google Gears, which gave you an in-browser, client-side database and, for example, enabled you to use Gmail while you were disconnected. This was a good idea and it has now been moved in under the HTML5 umbrella, so the old Gears is now deprecated and will disappear from the web in December 2012. I should also mention that similar features exist in both Silverlight and Flash, which should be no surprise.

What HTML5 will bring, is a number of options:

I won’t go into these at this time; the browser vendors haven’t agreed on these yet and as with other HTML5 technologies, it’s still on the “bleeding edge”.

Also: if you plan to store data more or less permanently on the client side, you had better have a plan for a very robust data synchronization mechanism that kicks in when the same user uses your app from many different devices, as well as robust protection against evil, client-side tinkering with your app’s state.

Conclusions, perspective and “going client/server”

It’s important to recognize that, as a web app developer, you inherit intrinsic problems from the web’s architecture and dealing with state across round-trips is one of them.

A lot of different methods for dealing with this have been developed over the years and all of them have their built-in downsides. Hence, you must choose wisely. One of the above methods might be just what you need. Or it might not.

If you need to deliver a really sophisticated UI, then my take is that basing your solution on the round-trip model won’t cut it. The web was not made for sophisticated UIs, and the classic toolbox falls short.

There are ways to get around the limitations, however: you simply don’t base your solution on round-tripping. You “go client/server” and create a full-fledged JavaScript app that lives on a single web page (or a few, perhaps). But that’s an entirely different story… and it will have to wait until another time.

While researching links for this post, I came across this MSDN article that seems like a good read: Nine Options for Managing Persistent User State in Your ASP.NET Application. Especially if you work on the ASP.NET stack.


Published Thursday, 8. September 2011, 19:41, Danish time.

Originally, web application technologies were designed around the notion of round-trips. The web was originally made for hypertext with the possibility of some rudimentary input forms, and developers could deliver solutions to simple input scenarios fairly easily. Things like sign-up on a web site, add-a-comment functionality, entering a search and so forth were easy to realize. You also had cookies for maintaining state across round-trips. Only much later were scripting languages added for a greater degree of interactivity.

Common knowledge. The web was meant to be a mostly-output-only thing.

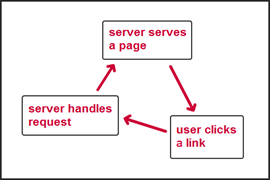

For application development, the model was basically the same as for hypertext sites:

- the server serves a page,

- the user interacts and clicks something or submits some input

- the server serves a new page or redirects

It is still like this today: if you can live with the limitations of this – very – primitive model and state storage, building sites is still easy and has been for many years.

But this simple model is not enough. Real application development requires a lot more and can also be easy – as long as you stick with what the tools were meant for.

Web frameworks know about round-tripping…

Modern server-side web frameworks like Ruby on Rails and ASP.NET MVC were designed to play along very well with the web’s round-trip model. The basic stuff is taken care of: mapping incoming requests to some logic that handles them, and mapping incoming URL parameters and posted user input automatically to parameters in method calls in your code. You then do your processing and return an updated page or send the user off to somewhere else. These tools take care of all the nasty details: handling the HTTP protocol, headers, cookies, sessions, posted values, encoding/decoding and more.

Also Microsoft’s older ASP.NET WebForms framework is easy to use and works fairly well for these kinds of solutions, although I would argue that it’s best suited for Intranet sites. WebForms was directly designed to enable Windows developers to develop apps as they were used to, to enable component based development and to try to hide the complexity in web development. This (again) works well as long as you don’t try to “bend too many water pipes” and just use it for what it was designed for (and if you are careful with those grid components). There are, however, a number of design issues and I will explain one of them in a bit.

In general, challenges start when you want to go beyond that simple round-trip model. What typically happens is that you want a solution that provides a richer client-side experience and more interactivity, so you need to do more in the browser, typically by using plain JavaScript, jQuery or a plugin like Flash or Silverlight. Now the web starts to bite you.

What if the user changes the data and then presses the Back or Close button in the browser? Then his changes are gone and in many cases you can’t prevent it. He could also press Refresh in the middle of everything or lose his Internet connection. Stateless architectures don’t really handle these problems well, so it’s up to the developer to take care of it. You will also be hit harder by different quirks in the many browsers out there.

The problem with repeatedly posted input

One particular problem, that is shared among all browsers, is the issue with “playing nice with the back button”.

This is what happens: after the user has filled out a form, submitted his values and gotten a page back with the updated values, he now presses refresh in his browser. Or he proceeds to another page and then presses back to get back to the one with the updated values. In this case, the browser prompts the user with a re-post warning: is he really sure that he wants to post the same data again? This is almost never his intention, so he of course replies "no" to the prompt. Or he accidentally replies "yes" and typically ends up in some kind of messy situation.

The average computer user has no clue what is going on. Or what to answer in this situation.

I am pretty sure that the historic reason for the prompting is that many sites over time have had too many problems with handling re-posts; if you bought goods in an Internet shop by posting your order form to the server and then posted the same form again by pressing refresh, you would simply buy the same goods twice. Or your database would be filled with duplicate data. So they just put pressure on the browser vendors and convinced them to treat the symptoms …

As a side note, my current bank's Internet banking web app simply logs people off instantly - I guess that they were simply afraid of what could happen... Or that their web framework had them painted into a corner of some sort.

So is it really that hard to avoid this problem in code? The answer is no. There are a couple of fairly easy ways:

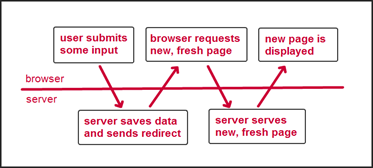

Fix #1: the Post-Redirect-Get pattern

This is a simple and well-proven pattern. The solution is that every time the user has posted some input, you ask the browser to read a fresh and updated page containing the user’s changes, instead of doing the default thing and just responding to the post request with some updated page content.

This is known as the Post-Redirect-Get pattern (PRG). The name comes from the sequence: the user POSTs some input to the server, the server saves the posted values and responds with a REDIRECT header to the browser, which then in turn issues a GET request to the server in order to load a fresh page, typically containing the user’s newly saved data. By using PRG, the user’s back button behaves nicely and doesn’t prompt the user about whether he really is sure about his back-button action.
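
The sequence above can be sketched as a tiny, framework-free request handler in Python. The path `/comments`, the form field `text` and the function name `handle_request` are hypothetical, and I have picked status 303 (See Other) for the redirect; many frameworks send a 302 instead, which browsers treat the same way in this situation.

```python
from http import HTTPStatus

SAVED_COMMENTS = []  # stands in for the database in this sketch


def handle_request(method, path, form=None):
    """Return (status, headers, body) for a request, following PRG."""
    if method == "POST" and path == "/comments":
        SAVED_COMMENTS.append(form["text"])  # 1. save the posted input
        # 2. do NOT render a page here; redirect so the browser issues a GET
        return HTTPStatus.SEE_OTHER, {"Location": "/comments"}, ""
    if method == "GET" and path == "/comments":
        # 3. a plain GET that can be refreshed and revisited without a prompt
        body = "\n".join(SAVED_COMMENTS)
        return HTTPStatus.OK, {"Content-Type": "text/plain; charset=utf-8"}, body
    return HTTPStatus.NOT_FOUND, {}, ""
```

Since the page the user ends up on was loaded with GET, pressing refresh simply repeats the GET and nothing is posted twice.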

Fix #2: stamping each page with a unique id

This is what you do: (1) stamp every input form you send to the browser with a unique id (usually a globally unique identifier / Guid), (2) let the user do his thing and submit the form, and then (3) before the server stores the input (along with the id), it checks that the id hasn’t been stored before. If the user has already stored the input once, you could show a message and/or send him to a page that displays the saved data in the system.

Besides making sure that the submitted data doesn’t get saved twice, this also adds a nice robustness to your system. A good thing.
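
A minimal sketch of the stamping scheme in Python could look like this. The class `OrderService` and its in-memory dictionaries are assumptions made for illustration; in a real system the check would typically be a unique constraint on the id column in the database, so that it also holds across servers.

```python
import uuid


class OrderService:
    """Stores each submitted form at most once, keyed by the form's unique id."""

    def __init__(self):
        self._issued = set()  # ids stamped on forms sent to the browser
        self._saved = {}      # form id -> stored input

    def new_form_id(self):
        """Step 1: create the id to embed in the form (e.g. in a hidden field)."""
        form_id = str(uuid.uuid4())
        self._issued.add(form_id)
        return form_id

    def submit(self, form_id, order):
        """Step 3: store the input unless this form id was stored before."""
        if form_id not in self._issued:
            return "rejected"   # the id was never handed out by this server
        if form_id in self._saved:
            return "duplicate"  # re-post: show the already saved data instead
        self._saved[form_id] = order
        return "saved"

    def saved_count(self):
        return len(self._saved)
```

A refresh or a double click on the submit button then becomes harmless: the second submit is recognized and nothing is stored twice.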

I can’t help thinking that if everybody had done it like this, we wouldn’t have needed those re-post warnings in our browsers in the first place.

With today’s browsers, you of course do get the re-post warning, so you should ideally combine this approach with PRG to get rid of that. But whether you do that or not, your database will never be harmed.

ASP.NET WebForms troubles

It’s a testament to how hard it is to get everything to fit, that Microsoft didn’t implement PRG as a default behavior in WebForms, which more often than not has caused WebForms solutions not to play nice with the user’s back button.

Let’s illustrate the design problem by discussing a WebForms page with a sortable grid. Initially, WebForms would normally stick the grid component’s column sorting settings into ViewState (a hidden field in the page), in order to be able to do a compare later. When the user clicks a column header, WebForms would submit the column index or something like that to the server, which then in turn would do the compare, carry out the sorting, save the new state of things in the page’s ViewState and return a nicely sorted grid to the user. Now at this point, the site has broken the browser’s back button, so if the user wants to refresh the data, he cannot press the browser’s refresh button without getting the re-post warning. Perhaps worse, he also cannot forward a link to the page with the sorted data via email, because the sorting information isn’t represented in the page URL.

Now, one way of solving this, could have been that a click on the column header would redirect to the page itself, but now with a URL parameter containing the unique name of the grid (= the control’s ClientID), the column header index and the sort direction. And Microsoft could have generalized that in some way, but it would result in unbelievably ugly URLs and also introduce a number of other complexities. And you also don’t see other situations where WebForms controls automatically read parameters directly from the incoming GET requests.
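
To give an idea of what such URLs might look like, here is a hypothetical sketch in Python. Nothing here is WebForms API: the parameter naming scheme (`<grid id>.sortcol` and `<grid id>.sortdir`) and both function names are invented for illustration, and the grid id is just an example of the kind of generated ClientID you typically see.

```python
from urllib.parse import parse_qs, urlencode


def grid_sort_url(page, grid_id, column, direction):
    """Build a GET URL that carries one grid's sort state (hypothetical scheme)."""
    if direction not in ("asc", "desc"):
        raise ValueError("direction must be 'asc' or 'desc'")
    params = {f"{grid_id}.sortcol": column, f"{grid_id}.sortdir": direction}
    return f"{page}?{urlencode(params)}"


def read_grid_sort(query, grid_id, column_count):
    """Read and validate the sort state from a query string; None if absent or invalid."""
    values = parse_qs(query)
    try:
        column = int(values[f"{grid_id}.sortcol"][0])
        direction = values[f"{grid_id}.sortdir"][0]
    except (KeyError, ValueError):
        return None
    if not 0 <= column < column_count or direction not in ("asc", "desc"):
        return None
    return column, direction


if __name__ == "__main__":
    print(grid_sort_url("/orders.aspx", "ctl00_Main_OrdersGrid", 2, "desc"))
```

The resulting URL can be refreshed, bookmarked and mailed around, but with several grids and generated control ids on a page it quickly becomes as ugly as described above.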

So I figure they just decided that it was a no-go. But I of course don't know for sure. What I do know however, is that if you just go with the flow in WebForms, your users will get re-post warnings.

Wrap-up

As you have seen, you easily run into irritating problems, even when you just want to develop fairly straightforward and simple web apps. It’s often an uphill struggle just to get a simple job done. And it’s a lot harder than it could have been, had web apps been part of the design of this technology in the first place.

Web technology was originally designed for hypertext output. Not apps. And it shows.


Published Tuesday, 2. November 2010, 08:09, Danish time.

Web technology has a lot of constraints and a lot of domain-specific “laws of nature” that you must obey if you want success with your web solutions. I think of these laws of nature as “domain laws”, for lack of a better term. The web can be pretty unforgiving if you don’t take these laws into careful consideration when you design your web app solution.

It takes time to learn these domain laws. For me, it has taken a lot of fighting, creativity and stubbornness to crack the nuts along the way. And a lot of the learning was done the hard way – by watching apps, logic and naïve design fail when exposed to the sheer power and brutality of the web.

It can be very hard to identify these domain laws. Often it’s an uphill struggle. Lots of technology people will fight for your attention in order to be able to sell their products and services to you – be that web software, operating systems, consulting services or simple platform religion. They would rather have you focus on their product than understand the domain and get critical. The assumption is of course that a sound web technology will work with the domain laws of the web, saving you from spending time on all this. But the reality is that most products have an old core technology, a wrong design and/or that they lag seriously behind in one or more areas. Once you have a lot of customers and a big installed base, you don’t rewrite the core of your product, even when the world has moved and you really ought to. That’s just how the mechanics of this work.

This is not to say that you won’t benefit from going with a certain technology or to say they can’t have good things going for them, but just that every product usually has serious quirks in one or more areas, and that these quirks often are tedious to a degree, where it doesn’t pay off to go beyond what the specific technology is good at and meant for.

In my mind this aspect is more important in web apps than in most other kinds of software solutions, and the problem here is that web applications are very hard to get right. There are so many factors that work in opposite directions that getting it right is, to a large degree, about keeping it as simple as you can: choose the functionality that you can live without and down-prioritize it, in order to have a chance to get all the important functionality right. Or if you prefer it the other way: identify what is really important, and then focus intensely on getting that right.

But even when you focus intensely on choosing the right solutions, there are lots of tradeoffs you have to make. You often have to choose between two evils. Hence, some good discussions on pros and cons are very beneficial, in my mind.

What’s a Web App?

Let’s first limit the scope: in this series, I will only be discussing “web applications”. I define web apps as web sites that are more than just hypertext (text and links) and simple interaction. A good example of a hypertext site is Wikipedia. Hypertext sites are what the web was originally made for – and web technology still works beautifully for these purposes.

Web apps are also web sites that it doesn’t always make sense to have indexed by search engines. Examples of these are web-based image editors, your personal webmail, a web-based stock trading app and lots more. In fact, anything personal or personally customized. (Not that you don’t want search capabilities in these apps, of course.)

Then there are web sites like Facebook, Flickr or Delicious that are somewhere in between hypertext sites and what I define as web apps. For these, search engine indexing and links from and to the outside make a lot of sense, but at the same time they definitely offer a customized experience for the user. They work fine as quick-in, quick-out sites, but they are not really tools you can use 40 hours a week. Flickr is not Photoshop. Another attribute is that these sites usually feature some kind of publishing process: the user prepares content for the public web and publishes it afterwards. Making content available on the web usually plays a big role in these solutions.

In contrast, “a real app” often doesn’t have that. It is much more concerned with delivering a highly optimized flow for the work the user uses it for. Think of an accounting app, a development tool or a CRM system – in these the user delivers work, and the more they can be customized, the better. With regard to search indexing, it doesn’t make much sense to index a development tool – indexing the programming code and the built-in help system makes sense. But not the rest.

Who is this for?

This content is in the category of articles I never found on the net, when I needed them myself. Maybe it was just me that didn’t know what to search for back then – or maybe it’s just not out there. Anyway: very soon my version will be here.

I figure and hope these posts will benefit other developers that are new to web apps but might be experienced in other areas of software development.

I call the series a “domain analysis” because I will try to gather all things web development in one place. The posts will be about the aforementioned domain laws, the dos and don’ts in web development and architecture, and the really ugly gotchas that you can run into in web app development. But I will only include things that I think are very important to know.

Originally I planned for this series to be a single all-in-one post, but when I started writing this thing, it just kept on growing on me. So I decided to split it up into multiple parts. This also makes it easier for me to spend a little more time on each part, while still being easy on myself and not getting all stressed out about it.

Post index and links to posts

I will update this index and its links as I get the parts completed.
