Published Tuesday, 29. November 2011, 00:26, Danish time.

I am currently spending quite some time learning (proper) JavaScript. It just so happens that the web is full of really, really great and free resources for doing exactly that: teaching yourself JavaScript (JS). That’s not really surprising when you think about it, because JS runs in the browser, and JS development has traditionally been done for the web.

It often comes as a surprise to .NET developers and other server-side or native app developers just how much the JS community “lives and breathes the web”. So this post is also intended as an easy pointer to give to colleagues and friends who want to know more. The other half of the rationale was that I was looking for all these resources anyway, so writing them up in a blog post was not much extra effort.

My findings have been quite fascinating; I have been blown away more than a couple of times. In this post, I have collected some of the cool stuff I have come across.

Where to find good JavaScript & jQuery documentation

The Mozilla Developer Network (MDN) is very good. I usually just google e.g. “mdn string”, which usually finds what I need right away. MDN’s main JavaScript index is at developer.mozilla.org/en/JavaScript/Reference.

For jQuery, it’s just api.jquery.com for quick lookups. They have been nice enough to place the cursor in the search box already, so you can start typing as soon as the page has loaded. Also check docs.jquery.com for reference and general documentation, and docs.jquery.com/Tutorials for, well, tutorials. Another good jQuery API site is jqapi.com; it’s fast and to the point.

Must-watch/read: “JavaScript: The Good Parts”

One thing you should understand up front is that there are a lot of coding practices you should avoid in JS. Instead, you should stick with the good parts. Douglas Crockford first talked about this back in 2007. There are videos of the talk all over the Internet, and one of them, on YouTube, is here. If you prefer, there is also a dead forest edition available.

Crockford’s talk about “the good parts” changed a lot of things. It made it clear that JS had (and has) a lot of nice qualities, and that, if you avoid certain language constructs, you can write very nice, understandable and succinct code. Even some of the most basic parts of the language are tricky, like comparing values and using the “this” keyword to provide call context. Crockford shows that JS actually has more in common with functional languages, like Lisp and Scheme, than with Java and other C-family languages (but don’t go crazy; JS doesn’t do tail call optimization).
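To see the two traps mentioned above, here is a small snippet you could paste into the browser console or run with Node. It is an illustration I put together, not code from Crockford’s talks: loose equality (`==`) converts types before comparing while strict equality (`===`) does not, and `this` is decided by how a function is called, not by where it is defined. The `counter` object is made up for the example.

```javascript
// Loose equality (==) converts types before comparing; strict equality (===) does not.
const looseResults = [
  0 == "",          // true: "" is converted to the number 0
  0 == "0",         // true: "0" is converted to the number 0
  "" == "0",        // false: both are strings, so no conversion happens
  null == undefined // true: a special case in the language
];
const strictResults = [0 === "", 0 === "0", "" === "0", null === undefined]; // all false

// `this` is decided at call time, by how the function is called.
const counter = {
  count: 0,
  increment: function () {
    "use strict"; // without this, a detached call would silently use the global object
    this.count += 1;
    return this.count;
  }
};

counter.increment(); // called as a method: `this` is counter, returns 1

const detached = counter.increment; // same function, but no object in front of the call
let detachedError = null;
try {
  detached(); // `this` is undefined in strict mode, so reading this.count throws
} catch (e) {
  detachedError = e; // a TypeError
}

const bound = counter.increment.bind(counter); // bind fixes `this` explicitly
bound(); // returns 2
```

Crockford’s advice, as I read it, is to use `===` everywhere and to be careful whenever a method is passed around as a plain function.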

If you prefer videos, like I do, you could stick around and watch his 8 talks on the matter – it’s more than 10 hours in total, but well worth the time. There are even more talks at the YUI Theater.

Online video-based JavaScript and jQuery course

I stumbled across a very nice online video-based course at learn.appendto.com. They guide you through all the basic concepts of JavaScript and, in parallel, of jQuery.

The videos are very good at pointing out behaviors that are specific to JavaScript, which makes it easy for you to grasp the differences from C# and other static languages.

Be sure to sign up, because then they will track your progress in a nice timeline on the page. I also recommend going through the exercises (click the vertical “exercise” text) AND watching their exercise walk-through videos afterwards: there is lots of good knowledge there as well. Their small exercise challenges will most probably trick you a couple of times if you are new to the language. They tricked me for sure.

Play with code on any PC using the browser

It’s so easy to try out JS code. In most modern browsers, you just hit F12 (on Windows) to open the browser’s developer tools, choose the Console and start typing JS. This way, you can easily run code in the context of the current page to test functions you have built, but you can also write and execute unrelated code. I can recommend spending an hour on the introduction to Google Chrome Developer Tools from Google I/O 2011; they show a lot of things you would normally need an IDE, a debugger and a protocol analyzer to do.

Or just go to the address bar in the browser and enter “javascript:” followed by a code statement.

All in all: there is no need for a sophisticated development tool just to try out some code.

Online workbenches & storage for code snippets

If you want to go a step further, there are plenty of good apps online. In general, they are free and directly accessible, with no credit card or private life secrets needed. Here are my favorites:

JSFiddle is a fun little online playground. It’s sort of an interactive IDE, and your fiddles (code snippets) can include HTML, CSS and JS, with a quarter of the screen for each and the last quarter for running your code and viewing the result. As in the browser console, you can run your code with Ctrl+Enter. You can create an account and keep all your snippets saved there. JSFiddle also allows you to embed working snippets in your own page.

Here is a direct link to one of mine: jsfiddle.net/codesoul/hGdb5/2/. I noticed some good examples of in-page fiddles on Steve Sanderson’s blog and on the KnockMeOut blog.

JS Bin is a bit simpler, but clearly of the same breed. The screen is divided into JS and HTML panes, with a nice option to show a real-time preview. You can’t create an account, but instead you can save your code snippet without any further fuss; the first time, you get a short URL, and after that it just adds a version number. You can render/run your code using Ctrl+Enter if you’re not using the real-time preview. Try my little example here: jsbin.com/akoqow/7/edit

REPL.it. Another nice one. It’s a friendly, very low friction editor page with a REPL on it. Saving snippets works the same way as with JS Bin. Try out my little example here: repl.it/BpU/3 (click the play button to run.it). Repl.it is currently missing a keyboard shortcut for running the code in the left hand editor pane, but on the other hand, the whole point of a REPL is that you work in the command line and here you just hit enter to run your statement or write multi-line statements using shift+enter. Well, I miss it anyway (and wrote to them about it).

Interestingly, REPL.it supports several languages, and everything is executed directly in the browser. Some of them (like CoffeeScript) were originally implemented in JavaScript, while others (like Python) were compiled from their original C implementations to JS, rather than to native binaries, using the Emscripten compiler.

Test your ability to handle the “bad parts”

If you are the type who learns by solving riddles, the Perfection Kills blog has a good challenge for you in the form of an online JavaScript quiz. It exposes all the ugly gotchas. Some are so tricky that you will laugh, cry and swear at the same time.
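For a taste of the kind of gotchas such a quiz plays with, here are a few well-known ones. They are my own picks, not necessarily questions from that quiz, and every line runs as-is in a browser console or Node.

```javascript
// typeof null is "object", a long-standing quirk kept for backwards compatibility.
const typeofNull = typeof null;

// NaN is the only value that is not equal to itself; use Number.isNaN to detect it.
const nanEqualsItself = NaN === NaN;     // false
const nanDetected = Number.isNaN(0 / 0); // true

// sort() compares elements as strings unless you pass a comparator.
const defaultSort = [10, 9, 1].sort();                // [1, 10, 9]
const numericSort = [10, 9, 1].sort((a, b) => a - b); // [1, 9, 10]

// `var` declarations are hoisted to the top of the function, but their values are not.
function readBeforeDeclaration() {
  const before = x; // no ReferenceError: x exists already, it is just undefined
  var x = 5;
  return before;
}

console.log(typeofNull, nanEqualsItself, nanDetected, defaultSort, numericSort, readBeforeDeclaration());
```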

Take your second “exam” by passing JavaScript Koans

“Koans” is a concept from the Ruby world, which is highly test driven. The concept is simple and brilliant: a smart programmer creates a good little set of failing unit tests and then you learn the language by making the tests pass one at a time. The term kōan was taken from Zen Buddhism.
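A rough sketch of the idea in plain JavaScript, without any test framework: each koan is a tiny test with a blank (`__`), and a runner stops at the first koan that fails, so you work through them one at a time. The names `__`, `makeKoans` and `walkThePath` are made up for illustration; the real koan projects have their own structure and test runners.

```javascript
// The blank a learner replaces with the right answer (a hypothetical convention).
const __ = Symbol("fill me in");

// Each koan is a tiny test that only passes once its blank holds the right value.
function makeKoans(answers) {
  return [
    { lesson: "'1' + 2 concatenates strings", passes: () => "1" + 2 === answers[0] },
    { lesson: "'3' * '4' converts to numbers", passes: () => "3" * "4" === answers[1] },
    { lesson: "typeof an array", passes: () => typeof [] === answers[2] }
  ];
}

// Run the koans in order and stop at the first failure, so they are solved one at a time.
function walkThePath(koans) {
  for (let i = 0; i < koans.length; i++) {
    if (!koans[i].passes()) {
      return `Koan ${i + 1} failed: meditate on "${koans[i].lesson}"`;
    }
  }
  return "All koans pass";
}

console.log(walkThePath(makeKoans([__, __, __])));          // the learner's starting point
console.log(walkThePath(makeKoans(["12", 12, "object"]))); // after filling in the blanks
```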

Most links seem to go to these two: github.com/mrdavidlaing/javascript-koans and github.com/liammclennan/JavaScript-Koans. Both must be downloaded and run locally, or exercised in an online IDE like Cloud9.

Examples of interactive learning sites

Steve Sanderson has created a very nice site for learning KnockoutJS MVVM online. It’s at learn.knockoutjs.com. All libraries should have a site like that.

Codecademy (codecademy.com) is for learning JS from scratch (so far). They are only a few months old and there is not a lot up there yet, but the concept looks very promising.

Finally, John Resig has a small Learning Advanced JavaScript tutorial meant to accompany his book Secrets of the JavaScript Ninja. It seems fun to play around with, even without the book. Then again, the book looks like an interesting read, so you will probably end up wanting the book to accompany the tutorial...

And then there is node.js, CoffeeScript, Tame.js, and…

Here are a few more things I am researching as well:

- jasmine – at pivotal.github.com/jasmine/ – a BDD/TDD test runner for JS. We use it at work. It’s amazing how much faster JS tests run compared to the usual C# stuff: hundreds of tests running in milliseconds is the norm, with no compiling or warm-up …

- QUnit – at github.com/jquery/qunit – is another well respected test runner. It’s the one used in the jQuery project.

- node.js – at nodejs.org – an event-based, asynchronous server framework for writing web servers (and other kinds of servers) in JS, executed by V8 (and itself written in C and C++). Video hint: the second video linked on the front page is the best. A good guess is that this little thing will change the way web developers work fairly quickly…

- CoffeeScript – at jashkenas.github.com/coffee-script – a new programming language built on top of JavaScript. The language is an attempt to weed out all of the bad parts of JS. It also gets rid of a lot of the C-like fluff in JS, like semicolons; (parentheses) and {curly brackets}. On the other hand, it makes debugging a bit harder, because you have to debug the JS it’s compiled into, not the CoffeeScript code itself. You can try CoffeeScript directly in the browser on its home page (click “Try CoffeeScript” in the header). There is a good tutorial at coffeescriptcookbook.com.

- tame.js – at tamejs.org – a nice little JavaScript extension that eases async programming by adding await/defer constructs to JS. It’s a testament to the power of JS’ dynamic nature that this can be done right now, with no need to wait for a new compiler, as you have to for C# 5’s async/await features. The trade-off is the same as for CoffeeScript: it’s based on a pre-compiler, so all debugging must be done against the JS it’s compiled into. Tame.js seems to be a good match for node.js. When I find time for this, I think I will also have to look at caolan’s async library…
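Tame.js and caolan’s async library both take care of the same chore in callback-heavy code, such as typical node.js code: waiting for several asynchronous operations to finish before continuing. The sketch below is a hypothetical hand-rolled `parallel` helper written with plain callbacks, so it is neither the tame.js syntax nor the async library’s API; it just shows the bookkeeping those tools take off your hands.

```javascript
// Hypothetical helper (not the tame.js syntax or the async library's API): start all
// callback-style tasks at once and call `done` exactly once, when every task has
// finished or as soon as one of them fails.
function parallel(tasks, done) {
  const results = new Array(tasks.length);
  let remaining = tasks.length;
  let finished = false;

  if (remaining === 0) {
    done(null, results);
    return;
  }

  tasks.forEach((task, index) => {
    let called = false;
    task((err, value) => {
      if (finished || called) return; // ignore late or repeated callbacks
      called = true;
      if (err) {
        finished = true;
        done(err);
        return;
      }
      results[index] = value; // results keep task order, not completion order
      remaining -= 1;
      if (remaining === 0) {
        finished = true;
        done(null, results);
      }
    });
  });
}

// Usage: two simulated lookups that complete out of order.
parallel(
  [
    (callback) => setTimeout(() => callback(null, "user"), 20),
    (callback) => setTimeout(() => callback(null, "orders"), 10)
  ],
  (err, results) => console.log(err, results) // null [ 'user', 'orders' ]
);
```

Even this small helper needs three pieces of state (results, a counter and a finished flag), which is exactly the kind of noise await/defer-style constructs aim to remove.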

And then there is just Soooooooooo much more out there…

Published Monday, 19. September 2011, 00:37, Danish time.

Handling client-side state in a round-trip-based web app can be fairly tricky. The tricky part is when and how to save changed state throughout the user’s entire session with your server, and how to synchronize state seamlessly between the client and the server along the way without slowing down your pages. The statelessness of the web will most probably challenge you now and then.

There are a number of approaches and techniques that can help you, and they all have their sweet spots, but also built-in downsides and often unwanted side effects. As in a lot of other areas of software development, it is important to know how much data you need to handle and what kind of security constraints your solution must work under.

Classic approaches to handle state across round-trips

There are a number of classic techniques you can use to handle state in your application, each with its own built-in pros and cons. None of them are perfect, as you will see. Below is a brief discussion of each technique.

This section of the post turned out to be fairly dense, but I think it’s important: you can really get into trouble if you don’t know these things.

Here goes:

- Storing state data in cookies was the original solution to the problem. It’s used on almost every web site out there for storing user ids and session ids.

Pros: (1) good and easy for small amounts of data – 4K is generally considered the limit, but you should never go that far, (2) data can be encrypted by the server – but is then of course inaccessible to the client-side code. Cookies are also where the server stores user and session ids between requests in all frameworks I know of. (3) Data is personal and bound to the user and the PC he is logged in to.

Cons: (1) cookies can be stolen by malicious software, (2) the browser submits the cookies related to a given hostname with every request to that server, including requests for .jpg, .css and .js files, so you will hurt performance if your cookies are too big.
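The cost of con (2) is easy to estimate, because every byte in the Cookie header is repeated on every request to the host. A minimal Node sketch, assuming a simplified cookie format with URI-encoded values and no quoting; `parseCookieHeader` and `cookieHeaderBytes` are hypothetical helpers, not a library API, and `Buffer` is Node-specific.

```javascript
// Parse a Cookie request header ("name=value; other=value") into a plain object.
// Simplified: it assumes URI-encoded values and skips pairs without "=".
function parseCookieHeader(header) {
  const cookies = {};
  for (const part of header.split(";")) {
    const separator = part.indexOf("=");
    if (separator === -1) continue;
    const name = part.slice(0, separator).trim();
    const value = part.slice(separator + 1).trim();
    cookies[name] = decodeURIComponent(value);
  }
  return cookies;
}

// Bytes the Cookie header adds to each request sent to the host,
// including requests for images, style sheets and scripts.
function cookieHeaderBytes(header) {
  return Buffer.byteLength(`Cookie: ${header}\r\n`, "utf8");
}

const header = "sessionId=abc123; theme=dark; cart=%5B42%2C7%5D";
console.log(parseCookieHeader(header)); // { sessionId: 'abc123', theme: 'dark', cart: '[42,7]' }

// A page that loads, say, 40 assets from the same host repeats the header 40 times.
console.log(40 * cookieHeaderBytes(header), "bytes of cookies for one page view");
```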

- State data can also be stored in hidden HTML input fields.

Pros: (1) You can store data in a way that is invisible to the user. (2) If the field is part of a form, its content is automatically submitted with the form when the user submits his input.

Cons: (1) Transporting the state back to the server requires that the page is posted back to the server (for instance by the user pressing a submit button) or that some client-side code reads the fields and sends them. (2) If the data is not encrypted (and it’s not, unless you do it), it can easily be manipulated by a hacker, so you will have to validate it on every round-trip. You should of course validate incoming data anyway before you put anything in your database. (3) Remember that post-backs do not “play well with the web”, because they are not “back button compatible” (see below). (4) If the user bookmarks your page, the current state is not saved with the bookmark.
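If you keep state in hidden fields, one common way to detect tampering is to sign the value with an HMAC using a server-side secret and check the signature on every round-trip. This is a technique I am adding here, not something the frameworks discussed necessarily do. Signing does not hide the data, it only makes changes detectable, and you still need to validate the value itself. A minimal Node sketch; `SECRET`, `signFieldValue` and `verifyFieldValue` are illustrative names.

```javascript
const crypto = require("crypto");

// Assumption: a long random secret that only the server knows.
const SECRET = "replace-with-a-long-random-server-secret";

function hmacHex(value) {
  return crypto.createHmac("sha256", SECRET).update(value).digest("hex");
}

// Value to put in the hidden field: "<value>.<signature>". The value stays readable.
function signFieldValue(value) {
  return `${value}.${hmacHex(value)}`;
}

// On every round-trip: return the value if the signature matches, otherwise null.
function verifyFieldValue(signed) {
  const separator = signed.lastIndexOf("."); // hex signatures never contain "."
  if (separator === -1) return null;
  const value = signed.slice(0, separator);
  const expected = Buffer.from(hmacHex(value), "utf8");
  const actual = Buffer.from(signed.slice(separator + 1), "utf8");
  // timingSafeEqual needs equal lengths and avoids leaking timing information.
  if (expected.length !== actual.length || !crypto.timingSafeEqual(expected, actual)) {
    return null;
  }
  return value;
}

const hidden = signFieldValue("step=3;cartId=42");
console.log(verifyFieldValue(hidden));                             // step=3;cartId=42
console.log(verifyFieldValue(hidden.replace("step=3", "step=9"))); // null: tampered
```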

- A variant of storing data in hidden fields is ASP.NET WebForms’ hidden field named ViewState. It’s used by internal WebForms logic to store the state of the view, which in this case is select properties from controls in the page and also a few other things.

ViewState has the same pros and cons as normal hidden fields, but makes things worse by serializing and Base64-encoding the data (and optionally encrypting it), which makes it grow in size. If you develop using the built-in grid components and don’t watch out, you can easily end up with a page weight of, say, 300K. Then your stuff is suddenly only suited for Intranet usage. This has caused many WebForms developers to spend lots of time fine-tuning pages by disabling ViewState on individual controls. To be fair, Microsoft did put a lot of effort into improving this in .NET 4, but you are still stuck with the post-back requirement. It should also be noted that, unless encryption is turned on, it’s fairly easy for a hacker to decode ViewState and start experimenting with the application’s internals.

- You can also store state data as query string parameters in all the links in your page. This causes the browser to send the parameters to the server when the user clicks a link. This method has been used a lot by big sites like Amazon and eBay.

Pros: Simple and robust solution, if you can live with the cons and with the fact that your state is public and easily changeable. In Amazon’s case, where I assume they just use it to determine, say, which sidebar ads they want to display on the next page, it is hardly a big problem, if somebody experiments with the values.

Cons: (1) Results in ugly URLs. (2) Has the same performance downsides as described for cookies, because the browser also sends the URL of the referring page to the server in an HTTP header (Referer) as part of every request. Actually, this causes the browser to send the state data twice when you click a page link. (3) URLs longer than about 2K characters are not reliably supported (Internet Explorer, for one, caps them at 2,083 characters). (4) It’s a lot of work to “patch” every link in the page to include all the state data. Functions for this are not standard anywhere, I think (at least not in ASP.NET). (5) It’s easy for the user to start hacking on your site by messing around with parameters. (6) Foreign web sites will get a copy of your state values if you link or redirect to them. (7) Client-side code cannot easily interact with these parameters.
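Con (4) is mostly plumbing. In a browser, the standard `URL` and `URLSearchParams` classes make the patching less painful; this sketch assumes a hypothetical `withState` helper and an example host, and also runs in Node.

```javascript
// Hypothetical helper: add the current state to a link, as query string parameters.
function withState(href, state, baseUrl) {
  const url = new URL(href, baseUrl);
  for (const [key, value] of Object.entries(state)) {
    url.searchParams.set(key, String(value)); // set() also replaces stale values
  }
  return url.toString();
}

// Reading the state back on the next request (or in client-side code).
function readState(href) {
  return Object.fromEntries(new URL(href).searchParams);
}

const state = { sidebar: "books", page: 2 };
const link = withState("/products?id=17", state, "https://shop.example.com/");
console.log(link);            // https://shop.example.com/products?id=17&sidebar=books&page=2
console.log(readState(link)); // { id: '17', sidebar: 'books', page: '2' }

// In a browser, every link in the page would need patching, for example:
// document.querySelectorAll("a[href]").forEach((a) => {
//   a.href = withState(a.getAttribute("href"), state, location.href);
// });
```

Note that a loop like the commented one would also patch links to foreign sites, which is exactly con (6).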

- Fragment identifiers are a lot like the above query string parameters with regard to pros and cons. The fragment identifier is the part of the URI after a “#” (hash mark), like “Examples” at the end of http://en.wikipedia.org/wiki/Fragment_identifier#Examples. The original idea behind it was to let the user jump quickly between internal sections of a web page, and hence the browser doesn’t request a new page from the server when the user clicks a link to a local fragment identifier. This also means that communication with the server requires sending data using client-side code.

Widespread use of the fragment identifier for keeping state is fairly new and is almost exclusively seen in web apps with large amounts of client-side logic, so I wouldn’t really consider it a classic technique for storing state, although it has been possible for many years. I consider this technique one big, clever hack, but it works beautifully and I think we will see it everywhere in the near future.
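A minimal sketch of keeping state in the fragment, assuming the state is a flat object of simple values; `stateToHash` and `hashToState` are hypothetical helper names. In a browser you would assign the result to `location.hash` and listen for the `hashchange` event; the serialization itself also runs in Node.

```javascript
// Serialize a flat state object into a fragment ("#tab=reviews&page=2").
function stateToHash(state) {
  return "#" + new URLSearchParams(state).toString();
}

// Parse the fragment back into an object; all values come back as strings.
function hashToState(hash) {
  return Object.fromEntries(new URLSearchParams(hash.replace(/^#/, "")));
}

console.log(stateToHash({ tab: "reviews", page: 2 })); // #tab=reviews&page=2
console.log(hashToState("#tab=reviews&page=2"));       // { tab: 'reviews', page: '2' }

// In a browser (render is a hypothetical function that redraws the UI):
// location.hash = stateToHash({ tab: "reviews", page: 2 }); // no request to the server
// window.addEventListener("hashchange", () => render(hashToState(location.hash)));
```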

- Last, but not least, you can use server side storage, like ASP.NET’s Session State. This is usually a key-value table that resides in memory or is stored in a database on the server-side.

Pros: (1) virtually unlimited storage compared to the above methods. (2) Only a small identifier is transported back and forth between the browser and the server, usually a session key in a cookie.

Cons: (1) It doesn’t scale well. Letting users take up extra memory means fewer users per server, which isn’t good. (2) If you stick with the in-memory solution, you get server affinity, meaning that your user always has to return to the same server to get to his data. If your server sits behind a load balancer, you must use “sticky sessions”. (3) If or when your server crashes or is restarted, you lose your data and kick all your users on that server off. (4) These two cons can be avoided by storing session data in a database, but then you pay by loading and saving data from and to the database on every request, which is generally considered “expensive”. (I wonder whether the new, high-speed NoSQL databases, like MongoDB, will change this picture soon.) (5) You can’t keep this data forever and will have to delete it at some point. On ASP.NET, the default expiration time for session data in memory is 20 minutes (less than a lunch break). You will turn some users away no matter which expiry you set. If you keep your session data in a database, you will have to run clean-up jobs regularly. (6) Again: client-side code cannot easily interact with this data.
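To make these cons concrete, here is a minimal in-memory session store with sliding expiry. It is an illustrative sketch, not how ASP.NET implements Session State: the `InMemorySessionStore` class is hypothetical, the 20-minute default mirrors the figure above, and the clock is injectable so expiry can be tested. Everything lives in one process’s memory, so a restart loses it and a second server cannot see it.

```javascript
const crypto = require("crypto");

// A key-value table in server memory, with sliding expiration.
class InMemorySessionStore {
  constructor(timeoutMs = 20 * 60 * 1000, now = () => Date.now()) {
    this.timeoutMs = timeoutMs;
    this.now = now; // injectable clock, handy for tests
    this.sessions = new Map();
  }

  // Returns the small id that goes back and forth in a cookie.
  create() {
    const id = crypto.randomBytes(16).toString("hex");
    this.sessions.set(id, { data: {}, lastAccess: this.now() });
    return id;
  }

  get(id) {
    const session = this.sessions.get(id);
    if (!session) return null;
    if (this.now() - session.lastAccess > this.timeoutMs) {
      this.sessions.delete(id); // expired: the user's state is gone
      return null;
    }
    session.lastAccess = this.now(); // sliding expiration
    return session.data;
  }

  // The clean-up job you would otherwise have to schedule yourself.
  purgeExpired() {
    const cutoff = this.now() - this.timeoutMs;
    for (const [id, session] of this.sessions) {
      if (session.lastAccess < cutoff) this.sessions.delete(id);
    }
  }
}

const store = new InMemorySessionStore();
const sessionId = store.create(); // sent to the browser in a cookie
store.get(sessionId).cart = [42]; // the state itself stays on the server
```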

Phew. There is a lot to consider here! As I said, I find it important to know both the pros and the cons in order to make the right architectural decisions.

REST – specifying everything in the request

It’s perhaps appropriate to also mention REST in this post, because it gets a fair amount of buzz these days (even though it’s been around since 2000), and because it is about managing state. REST stands for REpresentational State Transfer.

When evaluating REST, it’s fair to say that it has the same pros and cons as I listed for query string parameters above (although REST uses more transport mechanisms than just query strings). The reason is that one of REST’s key goals is to make the server stateless in order to gain scalability. And making the server stateless of course means that the client must send all relevant state to the server in every request.

So, if you are dealing with complex application state, REST in combination with round-trips will most probably make your requests too heavy, and your app won’t perform well. It is, however, very usable if your app doesn’t have a lot of client-side state to handle. And it’s also a very good way for a “fat” client to communicate with the server.

REST is, however, a very sound architectural style, and it would pay off for most web developers to know it and use it wherever it’s applicable. In the .NET world, REST has not caught on well yet. It’s a pity, but I think it will. It should; it captures the essence of the web and makes your app scalable and robust.

Future options for keeping state on the client side

In the future, you will be able to save state on the client side, but it will take years before this is widespread. Google pioneered this with Google Gears, which gave you an in-browser, client-side database and, for example, enabled you to use Gmail while you were disconnected. This was a good idea and it has now been moved under the HTML5 umbrella, so the old Gears is now deprecated and will disappear from the web in December 2012. I should also mention that similar features exist in both Silverlight and Flash, which should come as no surprise.

What HTML5 will bring is a number of options.

I won’t go into these at this time; the browser vendors haven’t agreed on these yet and as with other HTML5 technologies, it’s still on the “bleeding edge”.

Also: if you plan to store data more or less permanently on the client side, you had better have a plan for a very robust data synchronization mechanism that kicks in when the same user uses your app from many different devices, as well as robust protection against evil client-side tinkering with your app’s state.

Conclusions, perspective and “going client/server”

It’s important to recognize that, as a web app developer, you inherit intrinsic problems from the web’s architecture and dealing with state across round-trips is one of them.

A lot of different methods for dealing with this have been developed over the years, and all of them have their built-in downsides. Hence, you must choose wisely. One of the above methods might be just what you need. Or it might not.

If you need to deliver a really sophisticated UI, then my take is that basing your solution on the round-trip model won’t cut it. The web was not made for sophisticated UIs, so the classic toolbox falls short.

There are ways to get around the limitations, however: you simply don’t base your solution on round-tripping. You “go client/server” and create a full-fledged JavaScript app that lives on a single web page (or perhaps a few). But that’s an entirely different story… and it will have to wait until another time.

While researching links for this post, I came across this MSDN article that seems like a good read: Nine Options for Managing Persistent User State in Your ASP.NET Application. Especially if you work on the ASP.NET stack.

Published Thursday, 8. September 2011, 19:41, Danish time.

Originally, web application technologies were designed around the notion of round-trips. The web was originally made for hypertext, with the possibility of some rudimentary input forms, and developers could deliver solutions to simple input scenarios fairly easily. Things like sign-up on a web site, add-a-comment functionality, entering a search and so forth were easy to realize. You also had cookies for maintaining state across round-trips. Only much later were scripting languages added for a greater degree of interactivity.

Common knowledge. The web was meant to be a mostly-output-only thing.

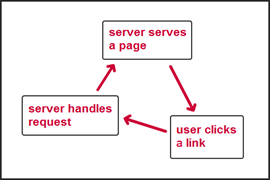

For application development, the model was basically the same as for hypertext sites:

- the server serves a page,

- the user interacts and clicks something or submits some input

- the server serves a new page or redirects

It is still like this today: if you can live with the limitations of this (very) primitive model and its state storage, building sites is easy and has been for many years.

But this simple model is not enough. Real application development requires a lot more and can also be easy – as long as you stick with what the tools were meant for.

Web frameworks know about round-tripping…

Modern server-side web frameworks like Ruby on Rails and ASP.NET MVC were designed to play along very well with the web’s round-trip model. The basic stuff is taken care of: mapping incoming requests to the logic that handles them, and mapping incoming URL parameters and posted user input automatically to parameters in method calls in your code. You then do your processing and return an updated page or send the user somewhere else. These tools take care of all the nasty details: handling the HTTP protocol, headers, cookies, sessions, posted values, encoding/decoding and more.

Also Microsoft’s older ASP.NET WebForms framework is easy to use and works fairly well for these kinds of solutions, although I would argue that it’s best suited for Intranet sites. WebForms was directly designed to enable Windows developers to develop apps as they were used to, to enable component based development and to try to hide the complexity in web development. This (again) works well as long as you don’t try to “bend too many water pipes” and just use it for what it was designed for (and if you are careful with those grid components). There are, however, a number of design issues and I will explain one of them in a bit.

In general, challenges start when you want to go beyond that simple round-trip model. What typically happens is that you want a solution that provides a richer client-side experience and more interactivity, so you need to do more in the browser, typically by using plain JavaScript, jQuery or a plugin like Flash or Silverlight. Now the web starts to bite you.

What if the user changes the data and then presses the Back or Close button in the browser? Then his changes are gone, and in many cases you can’t prevent it. He could also press Refresh in the middle of everything or lose his Internet connection. Stateless architectures don’t really handle these problems well, so it’s up to the developer to take care of them. You will also be hit harder by the various quirks of the many browsers out there.

The problem with repeatedly posted input

One particular problem, shared by all browsers, is the issue of “playing nice with the back button”.

This is what happens: after the user has filled out a form, submitted his values and gotten a page back with the updated values, he presses Refresh in his browser. Or he proceeds to another page and then presses Back to return to the one with the updated values. In this case, the browser prompts the user with a re-post warning: is he really sure that he wants to post the same data again? This is almost never his intention, so he of course replies "no" to the prompt. Or he accidentally replies "yes" and typically ends up in some kind of messy situation.

The average computer user has no clue what is going on, or what to answer in this situation.

I am pretty sure that the historic reason for this prompting is that many sites have had too many problems handling re-posted input: if you bought goods in an Internet shop by posting your order form to the server and then posted the same form again by pressing Refresh, you would simply buy the same goods twice. Or your database would be filled with duplicate data. So they put pressure on the browser vendors and convinced them to treat the symptoms …

As a side note, my current bank's Internet banking web app simply logs people off instantly - I guess that they were simply afraid of what could happen... Or that their web framework had them painted into a corner of some sort.

So is it really that hard to avoid this problem in code? The answer is no. There are a couple of fairly easy ways:

Fix #1: the Post-Redirect-Get pattern

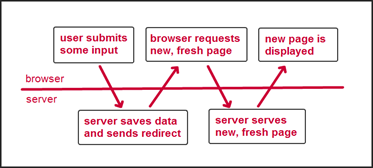

This is a simple and well-proven pattern. The solution is that every time the user has posted some input, you ask the browser to load a fresh, updated page containing the user’s changes, instead of doing the default thing and responding to the post request with updated page content.

This is known as the Post-Redirect-Get pattern (PRG). The name comes from the sequence: the user POSTs some input to the server, the server saves the posted values and responds with a REDIRECT (an HTTP 303 or 302 status with a Location header) to the browser, which then in turn issues a GET request to the server in order to load a fresh page, typically containing the user’s newly saved data. With PRG, Back and Refresh behave nicely, because the browser no longer asks the user whether he really wants to post his input again.
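The sequence can be sketched framework-neutrally. `handle` takes a simplified request object and returns a simplified response; the request/response shapes and the in-memory `comments` array standing in for a database are assumptions for illustration. The sketch uses 303 See Other for the redirect; 302 is also common in practice.

```javascript
// In-memory stand-in for a database (assumption for illustration).
const comments = [];

function escapeHtml(text) {
  const entities = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };
  return text.replace(/[&<>"']/g, (ch) => entities[ch]);
}

// Framework-neutral handler: takes { method, path, form } and returns { status, headers, body }.
function handle(request) {
  if (request.method === "POST" && request.path === "/comments") {
    comments.push(request.form.text); // 1. POST: save the posted input...
    return { status: 303, headers: { Location: "/comments" }, body: "" }; // 2. ...then REDIRECT
  }
  if (request.method === "GET" && request.path === "/comments") {
    // 3. GET: render a fresh page. Refresh and Back now repeat this GET, not the POST.
    const items = comments.map((text) => `<li>${escapeHtml(text)}</li>`).join("");
    return { status: 200, headers: { "Content-Type": "text/html" }, body: `<ul>${items}</ul>` };
  }
  return { status: 404, headers: {}, body: "Not found" };
}

const afterPost = handle({ method: "POST", path: "/comments", form: { text: "Nice post!" } });
console.log(afterPost.status, afterPost.headers.Location);     // 303 /comments
console.log(handle({ method: "GET", path: "/comments" }).body); // <ul><li>Nice post!</li></ul>
```

The important design choice is that the POST response carries no page at all, so the page the user ends up looking at is always the result of a harmless GET.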

Fix #2: stamping each page with a unique id

This is what you do: (1) stamp every input form you send to the browser with a unique id (usually a globally unique identifier, or Guid), (2) let the user do his thing and submit the form, and then (3) before the server stores the input (along with the id), check that the id hasn’t been stored before. If the user has already stored the input once, you could show a message and/or send him to a page that displays the saved data in the system.
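A minimal sketch of the three steps, assuming Node’s `crypto.randomUUID()` (available from Node 14.17) for the id and an in-memory `Set` standing in for what would really be a unique column in the database, written in the same transaction as the input. `renderOrderForm` and `saveOrder` are hypothetical names.

```javascript
const crypto = require("crypto");

// Stand-ins for the database: ids of forms already processed, and the saved orders.
// In a real system the id would be a unique column, written in the same transaction.
const processedFormIds = new Set();
const orders = [];

// (1) Stamp each form with a fresh unique id in a hidden field.
function renderOrderForm() {
  const formId = crypto.randomUUID();
  return (
    '<form method="post" action="/orders">' +
    `<input type="hidden" name="formId" value="${formId}">` +
    "<button>Buy</button></form>"
  );
}

// (3) Before storing the input, check that this form id has not been stored already.
function saveOrder(formId, order) {
  if (processedFormIds.has(formId)) {
    return { saved: false, message: "This order has already been received." };
  }
  processedFormIds.add(formId);
  orders.push({ formId, ...order });
  return { saved: true };
}

// (2) The browser posts the form back, including the hidden formId.
const postedFormId = /name="formId" value="([^"]+)"/.exec(renderOrderForm())[1];
const first = saveOrder(postedFormId, { item: 42 });  // { saved: true }
const second = saveOrder(postedFormId, { item: 42 }); // saved: false, e.g. after Refresh
```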

Besides making sure that the submitted data doesn’t get saved twice, this also adds a nice robustness to your system. A good thing.

I can’t help thinking that if everybody had done this, we wouldn’t have needed those re-post warnings in our browsers in the first place.

With today’s browsers, you of course still get the re-post warning, so you should ideally combine this approach with PRG to get rid of it. But whether you do that or not, your database will never be harmed.

ASP.NET WebForms troubles

It’s a testament to how hard it is to get everything to fit, that Microsoft didn’t implement PRG as a default behavior in WebForms, which more often than not has caused WebForms solutions not to play nice with the user’s back button.

Let’s illustrate the design problem by discussing a WebForms page with a sortable grid. Initially, WebForms would normally put the grid component’s column sorting settings into ViewState (a hidden field in the page), in order to be able to do a comparison later. When the user clicks a column header, WebForms submits the column index or something like that to the server, which in turn does the comparison, carries out the sorting, saves the new state of things in the page’s ViewState and returns a nicely sorted grid to the user. At this point, the site has broken the browser’s back button: if the user wants to refresh the data, he cannot press the browser’s Refresh button without getting the re-post warning. Perhaps worse, he also cannot forward a link to the page with the sorted data via email, because the sorting information isn’t represented in the page URL.

One way of solving this could have been for a click on the column header to redirect to the page itself, but now with URL parameters containing the unique name of the grid (= the control’s ClientID), the column header index and the sort direction. Microsoft could have generalized that in some way, but it would have resulted in unbelievably ugly URLs and introduced a number of other complexities. And you don’t see other situations where WebForms controls automatically read parameters directly from incoming GET requests either.

So I figure they just decided that it was a no-go, but of course I don't know for sure. What I do know, however, is that if you just go with the flow in WebForms, your users will get re-post warnings.

Wrap-up

As you have seen, you easily run into irritating problems, even when you just want to develop fairly straightforward, simple web apps. It’s often an uphill struggle just to get a simple job done, and it’s a lot harder than it could have been, had web apps been part of the design of this technology in the first place.

Web technology was originally designed for hypertext output. Not apps. And it shows.

Published Saturday, 3. September 2011, 13:44, Danish time.

Ooups, 3 months 8 months 10 months went by! – Without any posts. – Makes me a bit sad. – Not what I had hoped. What happened?

I got a new position at work. I am now Solution Architect, Lead Developer in a new team and supervising architect for two related teams, one of them in India. All while finishing my former project for the first couple of months. And I’m still naïve enough to try to get some coding work done every sprint.

At the time around my last post, back in late 2010, I also had a vague plan to move towards the Silverlight stack, with MVVM, Prism and all sorts of automated testing, but that’s not how it turned out.

I got the offer to work with a small and highly skilled team, working with an interesting stack of new web tools. And the product we are making is relatively isolated and fairly self-contained, and it has a happy business owner, so we’re like on a small, tropical island in the big, dark, corporate waters (said with a smile, of course).

I will probably write more about the technology stack we are using, but here is the quick list. It’s an “HTML5” app, so our tools are of course split mentally in two. On the client side, we use:

- Lots of jQuery and pure JavaScript, written in functional style and with object oriented constructions. We use a fair amount of libraries, plugins and polyfills.

- New UI modules are done using KnockoutJS and jQuery Templates.

- Other modules use jqGrid.

- CSS is “rationalized” using .LESS.

- CSS and JS are minified and bundled using a homegrown asset manager.

- New code is developed BDD-style, with Jasmine specs for the client-side JavaScript business logic.

- We are trying to get up and running with Specflow driving Selenium 2 (with WebDriver) for BDD-style integration and acceptance testing (to drive development at an overall level).

On the server side, the stack is:

- ASP.NET MVC3 for producing HTML and as a JSON server (using MVC3’s JSON binding support). We use C#.

- A new, homegrown shell-with-runtime-loaded-MVC-modules architecture, based on MEF (and a bunch of crazy tricks). We built it ourselves, because we couldn’t find any ASP.NET MVC plugin/shell frameworks out there at the time (December 2010) and we needed to empower our team in India, without ending up in merge hell. We spent a lot of time in Google. Shocking!

- A mix of new Razor & older ASPX WebForms views (for now).

- Unity for dependency injection.

- RhinoMocks for faking stuff.

- MSTest for unit tests (couldn’t find a proper product description).

- And again Specflow to drive the integration- and acceptance tests.

- Database is MS SQL Server with T-SQL stored procedures.

On the tooling side, there is:

A lot of the JavaScript tooling was new to me. Learning about and practicing duck typing, monkey patching, polyfilling old browsers and working with a dynamic language have been very exciting and enlightening. And it has taken a lot of time & effort.

Also, on the softer side, I introduced mind maps at requirements gathering meetings and wireframes built in MS Blend SketchFlow. Introducing these two was really exciting: out went the boring, traditional process of throwing emails with Word documents at each other and going through long, monochrome bullet lists during even longer meetings. In came lively meetings, engaged business owners and confident decisions. Definitely a change for the better. Highly recommended. I hope to get back to this later.

I must admit that all this really stole my mental focus and didn’t leave me much energy for writing blog posts. And I miss the clarity it brings me. So I will try to get back on track.


Published Tuesday, 2. November 2010, 08:09, Danish time.

Web technology has a lot of constraints and a lot of domain specific “laws of nature” that you must obey if you want success with your web solutions. I think of these laws of nature as “domain laws”, for lack of a better term. The web can be pretty unforgiving if you don’t take these laws into careful consideration when you design your web app solution.

It takes time to learn these domain laws. For me, it has taken a lot of fighting, creativity and stubbornness to crack the nuts along the way. And a lot of the learning was done the hard way: by watching apps, logic and naïve design fail when exposed to the sheer power and brutality of the web.

It can be very hard to identify these domain laws. Often it’s an uphill struggle. Lots of technology people will fight for your attention in order to sell their products and services to you, be that web software, operating systems, consulting services or simple platform religion. They would rather have you focus on their product than understand the domain and get critical. The assumption is of course that a sound web technology will work with the domain laws of the web, saving you from spending time on all this. But the reality is that most products have an old core technology or a wrong design, and/or lag seriously behind in one or more areas. Once you have a lot of customers and a big installed base, you don’t rewrite the core of your product, even when the world has moved on and you really ought to. That’s just how the mechanics of this work.

This is not to say that you won’t benefit from going with a certain technology, or that a technology can’t have good things going for it. It’s just that every product usually has serious quirks in one or more areas, and these quirks are often tedious to a degree where it doesn’t pay off to go beyond what the specific technology is good at and meant for.

In my mind this aspect is more important in web apps than in most other kinds of software solutions, and the problem here is that web applications are very hard to get right. There are so many factors pulling in opposite directions that getting it right is to a large degree about keeping it as simple as you can: choosing functionality that you can live without and deprioritizing it, in order to have a chance to get all the important functionality right. Or if you prefer it the other way: identify what is really important, and then focus intensely on getting that right.

But even when you focus intensely on choosing the right solutions, there are lots of tradeoffs you have to make. You often have to choose between two evils. Hence, some good discussions on pros and cons are very beneficial, in my mind.

What’s a Web App?

Let’s first limit the scope: in this series, I will only be discussing “web applications”. I define web apps as web sites that are more than just hypertext (text and links) and simple interaction. A good example of a hypertext site is Wikipedia. Hypertext sites are what the web was originally made for, and web technology still works beautifully for these purposes.

Web apps are also web sites that it doesn’t always make sense for search engines to index. Examples of these are web based image editors, your personal webmail, a web based stock trading app and lots more. In fact, anything personal or personally customized. (Not that you don’t want search capabilities in these apps, of course.)

Then there are web sites like Facebook, Flickr or Delicious that are somewhere in between hypertext sites and what I define as web apps. For these, search engine indexing and links from and to the outside make a lot of sense, but at the same time they definitely offer a customized experience for the user. They work fine as quick-in, quick-out sites, but they are not really tools you can use 40 hours a week. Flickr is not Photoshop. Another attribute is that these sites usually feature some kind of publishing process: the user prepares content for the public web and publishes it afterwards. Making content available on the web usually plays a big role in these solutions.

In contrast, “a real app” often doesn’t have that. It is much more concerned with delivering a highly optimized flow for the work the user uses it for. Think of an accounting app, a development tool or a CRM system: in these, the user delivers work, and the more they can be customized, the better. With regard to search indexing, it doesn’t make much sense to index a development tool. Indexing the programming code and the built-in help system makes sense, but not the rest.

Who is this for?

This content is in the category of articles I never found on the net when I needed them myself. Maybe it was just me that didn’t know what to search for back then, or maybe it’s just not out there. Anyway: very soon my version will be here.

I figure and hope these posts will benefit other developers that are new to web apps but might be experienced in other areas of software development.

I call the series a “domain analysis” because I will try to gather everything about web development in one place. The posts will be about the aforementioned domain laws, the dos and don’ts in web development and architecture, and the really ugly gotchas that you can run into in web app development. But I will only include things that I think are very important to know.

Originally I planned for this series to be a single all-in-one post, but when I started writing this thing, it just kept on growing on me. So I decided to split it up into multiple parts. This also makes it easier for me to spend a little more time on each part, while still being easy on myself and not getting all stressed out about it.

Post index and links to posts

I will update this index and its links as I complete the parts.


Published Friday, 15. October 2010, 20:22, Danish time.

My story about what’s in the “CodeSoul” name and “the rare sense of code with soul” is now online. You can reach it by clicking the link in the hello there box in the upper right corner – or by clicking here.


Published Thursday, 14. October 2010, 00:01, Danish time.

There is a certain “misfit” that I have heard about from many good devs: lots of potential productivity and quality gains that are arguably not being harvested in the majority of big companies out there.

There are certain “laws of nature” that rule in this field. I will try to describe some of them here.

I think that a lot of it has to do with how developers are managed by managers.

The problem is that you only unleash a fraction of the potential benefit of employing a clever developer if you see him/her as a sheep you have to herd, a naïve soul that has to be led. Modern management cultures practice lean and agile processes and work with their developers instead of trying to lead them. But “modern management” of that quality is rarely found in the average enterprise company.

Development talent is very different from management talent. Organizing systems, data and code is a complex task, just as organizing company processes and departments and managing strategies and people is. Developers handle very complex technical challenges and team dynamics. Likewise, managers need to handle other aspects in parallel, like marketing strategies and legal affairs.

I will argue that in many organizations, the average developer is at least as intelligent as the average manager.

But how do you work with nerds?

Well, the first thing you do is to cooperate. Work with your developers, in order to get the synergy going and benefit the most.

Many managers turn their back on skills and knowledge

Developers and managers live in different universes, so you have to bridge them somehow, and as always, communication is the best means for that. And, again as always, communication is damn hard. In this case, it seems to be particularly difficult. One main limiting factor is management vanity, if I’m not mistaken: it’s apparently hard for many managers to admit limited knowledge. But it’s really not the manager’s role to know everything anymore. Maybe it was like that in a distant past, even though I really doubt that that concept ever really worked in the IT industry.

Many managers became managers because they wanted to lead other people and wanted to be in control. Many went straight into a theoretical management education. Often this isn’t a good thing if you have to cooperate with your employees rather than lead them. Some would rather pay expensive management advisors to analyze their business than engage with their employees in order to surface the steps the company needs to take to go forward. More often than not, there is already plenty of knowledge to harvest inside the company.

Often managers have also sold themselves to the company for a high price, which leads to high expectations from above, which leads to pressure, which in turn makes people try to show off by coming up with monstrous master plans without first having learned enough about the business and the technical domains involved. This pattern has been repeated over and over.

Now, I know that I am being a bit tough here, and of course there are also plenty of great managers out there. But I definitely think that there is a trend here.

I’m also not saying that developers always communicate perfectly, nor that they aren’t partly responsible for failing communication whenever that happens. That’s not my point. My point is that many managers become responsible for poor results, because they often don’t activate the good skills in their organizations. Managers must produce through their employees. So they must ensure that employees work optimally.

Then there is the money aspect...

You could say that all this ultimately is about what you get for your money. IT projects usually become very expensive when the organization doesn’t work on the right things…

The innate geek pride and enthusiasm

There is a close relationship between skills and enthusiasm. If you love what you do and take great pride in your work, you usually get pretty good at it.

This is especially prevalent among software developers: most developers love to be good at their thing. And for a software developer, delivering a good job usually includes delivering something that makes sense for their users and customers, something that’s stable, robust and fast.

Delivering a good software job is usually well aligned with what makes sense business-wise.

This kind of care for what you do is present in most developers I have met. You can just ask them about their best projects, and you will see a person that lights up in enthusiasm. This is kind of unusual when you compare to lots of other professions; I guess that this profession somehow attracts people with this mindset – or maybe you just don’t keep on doing software if you’re not this kind of breed.

Sadly, you see pride and enthusiasm in action all too rarely in typical project work in the typical enterprise. And to me that’s just lots of wasted potential…

I don’t think, though, that it’s too hard to activate the geekiness in most cases. That makes its rarity quite paradoxical, considering the difference that engaged employees make to any business.

Surface the business challenges in order to engage

Posing challenges to developers and being transparent about business challenges and the competitors’ moves is often enough to activate the synergy. A company with a closely coupled business unit and development department will often do magic and also usually moves forward all by itself. By “closely coupled” I mean that developers should talk to business people directly – not through several layers of indirection, like through managers that talk to other managers or company councils that maintain written lists and distribute documents between the two parties. You need the direct contact in order to make the challenge real.

This setup, however, “reduces” the manager’s role to being the one who pushes gently in the right directions when changes are needed. This will usually be a simple task, involving little more than explaining the new challenges to the people involved.

What will separate the good managers from the bad, will be how they stay on top of things and how, when and why they start pushing.

The devs will then take great pride in delivering the magic to make things happen business wise. I bet you they will! Trust me!

I’m more concerned that this “reduced” role doesn’t really satisfy the manager involved… (and if this happens, I think that the only realistic solution for the developer is to replace their manager…)


Published Friday, 1. October 2010, 00:53, Danish time.

Over time there has been a lot of ranting and quarreling on the net about ASP.NET WebForms’ “name mangling”, that is, the computed ID attributes that WebForms puts on HTML elements generated by server-side WebControls. Just try to search Google for “ASP.NET” + “name mangling”. The sum of web designer work hours spent worldwide on fighting this during ASP.NET’s lifetime must be astronomical.

I have read a lot of these discussions, and it puzzles me that I never found a good description of the root cause in any of them.

It's really a basic and unsolvable conflict between object oriented web development and precisely targeted CSS styling.

The problem is of course not unique to ASP.NET WebForms; it applies to all component based page frameworks.

Let’s go through it.

The web developer side of the problem

When you are developing a web page component and deliver it “to the world”, you never know where, how often, and how your component is used. It follows from object oriented thinking (OO) that a class doesn’t know about its container.

If somebody decides to use multiple instances of your component in the same page, and they all render the same HTML element ID, this would violate W3C standards. HTML element IDs must be unique in an HTML page.

In WebForms up until .NET version 3.5 SP1, Microsoft solved this problem by automatically computing the IDs rendered. On a WebForm, controls live inside other controls, and the rendered HTML IDs then basically reflect the tree structure that the controls form. That’s why you see IDs like “MainForm_SideBar_SearchButton”. (In this case, the SearchButton control lives inside a control named “SideBar”, which again lives inside a control named “MainForm”.)

If somebody then moves the SearchButton control out of the SideBar, it will get a new ID. Not so nice if you depend on the IDs, which is exactly what web designers tend to do.
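The following TypeScript sketch is a deliberately simplified model of the mechanism described above, assuming each rendered ID is just the chain of ancestor control IDs joined with underscores. The real WebForms algorithm only takes naming containers into account and may insert generated IDs such as “ctl00”, so treat the `Control` class below as hypothetical.

```typescript
// Simplified, hypothetical model of WebForms-style ID computation: a
// control's rendered ID is the chain of its ancestors' IDs joined by "_".
// Real WebForms only uses naming containers and may generate IDs like
// "ctl00"; this sketch ignores both.

class Control {
  readonly children: Control[] = [];
  parent: Control | null = null;

  constructor(readonly id: string) {}

  // Attach a child, detaching it from any previous parent first.
  add(child: Control): Control {
    child.parent?.remove(child);
    child.parent = this;
    this.children.push(child);
    return child;
  }

  remove(child: Control): void {
    const i = this.children.indexOf(child);
    if (i >= 0) this.children.splice(i, 1);
    child.parent = null;
  }

  // Walk up the tree and join the IDs from the root down.
  get clientId(): string {
    const parts: string[] = [];
    for (let c: Control | null = this; c !== null; c = c.parent) {
      parts.unshift(c.id);
    }
    return parts.join("_");
  }
}
```

Two instances of the same component in different containers get distinct IDs, which keeps the page valid. But moving SearchButton from SideBar straight into MainForm changes its rendered ID from MainForm_SideBar_SearchButton to MainForm_SearchButton, and any CSS rule written against the old ID silently stops matching.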

The web designer side of the problem

Web designers like element IDs.

When you write CSS, IDs have the highest priority when the browser determines which style to use for a given HTML element. IDs are said to have a high “CSS specificity” and outrank classes and element types. This means that styling against IDs is safe if you want to be sure that your CSS rule trumps everything else (for now, we completely ignore inline styles, which are bad practice anyway).

If you want to read more about how the browser calculates specificity, I recommend W3C’s description which is short and precise (there is another good description here).

It’s perfectly fine to style against IDs, if what you are styling is HTML in a single, flat .html file. But as soon as you move into the enterprise level web apps – or just work on complex web apps in general – you are not in control of all the HTML being spit out.

The essence of this is that it's a bad idea to base styling on IDs when working in the world of componentized web pages.

The solution: style against CSS classes

Luckily, with some planning and coordination, it’s fairly easy to make things work using CSS classes instead of IDs. And if you combine HTML element type and CSS class name, you will get a pretty good CSS specificity out of it. This way you will lower the risk of having colliding CSS styles. You can also just add more classes and element types to a selector in order to give it higher specificity, so “div.layout div.box ul li” will have higher specificity than “div.box ul li”.
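To see the numbers behind this, here is a small TypeScript sketch that counts IDs, classes and element types in a selector and compares the results column by column, following the (a, b, c) scheme from the W3C Selectors specification. It only understands type selectors, .classes, #ids and the descendant combinator; attribute selectors, pseudo-classes and other combinators are left out on purpose, and the function names are hypothetical.

```typescript
// Minimal specificity calculator for simple selectors made of type
// selectors, .classes and #ids joined by descendant (space) combinators.
// Attribute selectors, pseudo-classes, :not(), other combinators and
// inline styles are deliberately not handled.

type Specificity = [number, number, number]; // [ids, classes, types]

function specificity(selector: string): Specificity {
  let ids = 0;
  let classes = 0;
  let types = 0;
  for (const compound of selector.trim().split(/\s+/)) {
    // Split a compound like "div.box#main" into "div", ".box", "#main".
    const parts = compound.match(/[#.]?[A-Za-z_][\w-]*|\*/g) ?? [];
    for (const part of parts) {
      if (part.startsWith("#")) ids++;
      else if (part.startsWith(".")) classes++;
      else if (part !== "*") types++; // the universal selector counts for nothing
    }
  }
  return [ids, classes, types];
}

// Compare left to right: one ID beats any number of classes, and one
// class beats any number of element types. Positive means a wins.
function compareSpecificity(a: Specificity, b: Specificity): number {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}
```

With this, “div.layout div.box ul li” scores (0, 2, 4) and beats “div.box ul li” at (0, 1, 3), while a lone “#SearchButton” at (1, 0, 0) still beats both.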

There are of course cases where it makes sense to style against IDs, e.g. a page header showing a logo image. In these cases, consider writing the plain HTML directly in the file yourself, instead of having components render it. Then it’s very visible to others what goes on. Oh, and you should not override the Render() method in ASP.NET components to manipulate the rendered IDs; it will just get you into trouble at some point.

More thoughts and afterthoughts

In general, you should think of CSS class names as "the contract" between the developer and the designer. In a component based site, CSS designers should think: “every time an element like this shows up, I want it styled like this”. Things are not as predictable as with static HTML pages, which is why CSS people must think in a more generalizing way.
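A minimal TypeScript sketch of what such a contract might look like from the developer’s side: the component takes its class names from one shared list and never renders an `id` attribute, so any number of instances can appear on the same page. The `CssContract` object and the `renderBox` function are hypothetical.

```typescript
// Hypothetical "CSS class names as the contract": one shared list of class
// names that developers render and designers style against. No IDs are
// emitted, so the component can appear any number of times on a page.

const CssContract = {
  box: "box",
  boxTitle: "box-title",
  boxItems: "box-items",
} as const;

// Escape text before putting it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderBox(title: string, items: string[]): string {
  const lis = items.map((item) => `<li>${escapeHtml(item)}</li>`).join("");
  return (
    `<div class="${CssContract.box}">` +
    `<h2 class="${CssContract.boxTitle}">${escapeHtml(title)}</h2>` +
    `<ul class="${CssContract.boxItems}">${lis}</ul>` +
    `</div>`
  );
}
```

The designer can then style every box with selectors such as “div.box ul li”, regardless of where, or how many times, the component ends up on a page.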

I think that the CSS specificity rules also show that CSS was really designed to deal with plain, static HTML files – rather than dynamically generated pages.

In WebForms in .NET 4, Microsoft has partly solved the problem by introducing the ClientIDMode property, which gives the developer better control over the rendered HTML ID, including locking it down entirely by setting ClientIDMode=Static.

But the fundamental OO vs. CSS conflict is still there.


Published Friday, 24. September 2010, 01:32, Danish time.

Agile processes are here to stay. But the implementation is hard in many organizations. And the reason for that is that software is a very complex phenomenon.

In short, producing perfect software is simply impossible given the huge number of varying and unknown parts.

Your software has to match functional needs, communicate clearly to users so there are no misunderstandings, perform well, scale well, be simple to operate, be easy to maintain, be bug free, look and feel attractive and more.

And high quality software is crazy difficult to produce: understanding what you actually need is hard, communication between devs and customers is hard, devs don’t always have the skills needed, you learn and get new ideas along the way and your customer’s market is most probably a moving target.

On top of that, management usually presses to – or is pressed to – make the financial future predictable, so they naturally want to freeze the project expenses. And plan use of all the developers in the next hot project that has to start in exactly 2 months. And lock down the features by selling the product in advance to important clients. And …

And when the project is done, your world changes, your competitor makes an unexpected move, the company reorganizes or new technologies become available. And suddenly your perfect software is outdated and needs to change.

There are also several clashes between traditional thinking and the kind of thinking that’s needed in order to be successful in software projects.

In my mind, the most difficult part is to give up on the idea of certainty. In most software projects, certainty is simply not an option. In my experience, the most common conclusion when you look at projects in hindsight is that they didn’t evolve and turn out the way you expected at all.

So what can you do?

You have to act rationally and make sure that every step you take forward makes sense. And take great care along the way. If you are really good, you will probably achieve 50% of what you thought you would. If you are really good, 50% of what you achieve will be good things that you never even thought of in the first place.

I think that “making sure” along the way is the key to success. In fact, I think it’s your only chance!

For my part, I learned about “making sure” the hard way.

Getting washed over by waterfalls

Back when I started developing at the beginning of the ’90s, all projects at my job were waterfall projects. We spent an enormous number of hours analyzing, planning and arranging Gantt charts. It was hell: not only was it very hard to get right under time pressure, you also often had a bad feeling about it, because you were often forced to make brave assumptions. And every detail had to be adjusted several times. People were often pretty stressed out about it, and that usually led to mistakes.

The downsides of the waterfall approach are well known today:

- It only works, if you have complete domain knowledge and know exactly what has to be done in advance. And this is (almost) never the case.

- It doesn’t make room for unexpected problems.

- It doesn’t give you many chances to learn and correct things along the way.

- “The business” (the customer) almost never knew their business to a degree where all rules were so well known that a developer could express them in code.

- A kind of circular thinking was widespread: if your plan failed, it was because it wasn’t detailed enough. So apply more waterfall thinking!

- When the plan was detailed enough, you had more or less done 75% of the total work in the analysis phase. You simply had to – in order to be able to guarantee a successful result. This became known as prototyping.

- Cutting scope was often done by the project manager – who often didn’t know the business that well.

- (I am sure that 50 more items could easily be added to this list…)

It was very frustrating if you had ambitions to make your users happy and deliver well functioning solutions. So grand failures were common. Ironically, the bigger the projects were, the more impossible the whole thing was and the more spectacular failures this method would lead to.

I was very fortunate to start my developer career doing projects where I had a very good domain knowledge myself. I started programming out of need to solve specific problems (and perhaps also because of geeky genes). But even though I thought that I knew my domain stuff well, plans often had to be turned upside down or extended anyway.

It became obvious that there was only one way ahead:

Feedback loops, feedback loops, feedback loops!

You had to communicate. I started talking to colleagues and users every time something was unclear. And every time the plan for a complicated feature was about to be finished and locked down in the project plan, I talked to my users to verify the planned solution. Just to make sure.

I would go and ask questions like: “have you considered the consequences of feature X; because of the way it works, you have to carry out task Y in this new way and you can no longer carry out task Z like you used to – is that acceptable?” Or: “if we register your actions during your work, then we can automate the statistics and you don’t have to fill out forms afterwards – but it will mean that your work performance can be measured in detail – what do you think about that?”

There is one particular experience that I often wonder about: it was often best to hook up with the users that were considered “noisy” by their managers. That was a paradox that took me a while to understand. As I see it now, they were often noisy because of ambitions and pride, topped with frustrations over the state of things. I would go and ask: “what would be the best thing to do?” A simple question with a surprising effect. A fruitful discussion would often unfold. They would be relieved that somebody was listening, and I would know a lot more afterwards. Often they became enthusiastic about the project because they could see an end to their frustration. And on several occasions they would become super users and good assets for their employers once the new system was delivered. An amazing paradox!

Back on track: I often came back from lunch or the coffee machine with new and disturbing knowledge and had to re-plan a lot. And re-do Gantt charts and all. Pain! On top of that, this was usually not what made project managers very happy. Getting knowledge about bad design or potential failures when the promises are already made is not nice for anybody… So I had my share of fights…

I had already seen too many failing projects and work process redesigns, so there was really no turning back, and I got stubborn about it. Fortunately, I was lucky enough to get into projects where the right kind of “close coupling” with the users was appreciated, because it led to better results.

I don’t think that my story is unique. Fortunately others had a far more generalizing approach and formulated and compiled well thought-out practices like Scrum. But to this day, I still think of Scrum and other agile practices as means to make sure that you are doing the right thing.

Let me elaborate a bit on this.

Scrum is concentrated making-sure philosophy

Here are a couple of examples:

- The ScrumMaster role. The ScrumMaster makes sure that developers can work efficiently without interruptions, have a fully functional computer, and so on.

- The Daily Scrum meeting. Here the team makes sure that they get progress, that no dev is thrashing, that unforeseen problems are dealt with in an efficient manner, and so forth. Constant feedback captures mistakes early.

- The Product Backlog. This is used to collect and store work tasks, so that the Product Owner can make sure that nothing is forgotten.

- The Product Owner must describe and prioritize product backlog tasks in order to make sure that the team always have meaningful work tasks to fill into their sprints.

- The Product Owner must also make sure that all the work done in the project makes sense from a business perspective.

And so forth.

In my mind it gets really easy to understand Scrum, if you view it from this angle.

Today it’s clear that agile thinking has won in most of the software business. But there are still a lot of people who are not convinced, or who bend the Scrum practices to a degree where you can hardly call them Scrum anymore. Scrum co-creator Ken Schwaber calls these practices ScrumButs and even wrote a book on the subject. He found that the recurring excuse pattern was “Yes, we use Scrum, BUT … we don’t do X, because of Y explanation”. He has also said that “only 25% of all organizations that embraced Scrum would fully benefit”, and I have heard his partner in crime Jeff Sutherland talk about a third. Not good rates. A pity.

The general thinking is that ScrumButs flourish because it’s hard to give up old habits and old culture.

My point is that you don’t really understand software and software project challenges well enough, if you don’t “make sure”. I don’t think you really have a choice.

---

Here are a couple of additional interesting links I came across while writing this post:


Published Saturday, 18. September 2010, 19:12, Danish time.

So now I have started! I’ve been thinking about “going public” for a long time, but I haven’t really found time to do it before now. After playing around with the software and the design during the rainy parts of the summer, I feel that I’m finally set and ready to go.

So why?

There are lots of things that I feel need to be said. Things that I haven’t been able to find elsewhere myself, be that on the net, in books, in discussions or in podcasts. Things that I wish I had found years ago when I needed them. Ways to think about software that made things fall into place for me and gave me certainty and peace of mind. And made it easier to solve problems and construct good software.

I will throw some of them out in the wild – on this blog – and then see what happens. I hope to find that there are people out here willing to share insight and maybe take my thoughts and develop them in new and surprising directions.

Sometimes I feel that I’m alone in the world in my way of thinking about software – that my brain must have been folded in different and crazy ways. But I might just be describing things differently. Time and feedback will hopefully teach me.

I can’t really find any deeply rational reasons to do this. I don’t have a job where anything depends on me doing this – on the contrary: my job usually requires me to stay as focused on the task at hand as possible. I’m also not a contractor or a speaker and I don’t have to write smart stuff on the web to get the good contracts.

It’s more about the soft things. To see, if I can learn from doing this. I find that it’s often a relief to get your thoughts written down. Then you can let go of them and open your mind up in new directions. A blog seems to be a good place for this.

I am excited to see what happens.

Who am I?

I’m a Danish software developer. I’m 44. I have been working professionally first in IT and then software development for 22 years now. Mostly in the financial sector in Denmark. I have a sweet wife and two wonderful kids and lots of interests and responsibilities. My day usually has too few hours.

So it will probably be a challenge for me to find time for writing blog posts.

But I have a plan and cross my fingers.

And now… – on with the writing!
